Making AI systems safe, with mathematical certainty.

We are a non-profit organisation dedicated to formal methods and artificial intelligence research, committed to building technology for the safety assurance of algorithmic systems, for the benefit of all.

Our Mission

Ensuring safety is fundamental to the alignment and governance of algorithmic systems—especially when these systems interact with the physical world or environments where stakes are high and their decisions impact our lives.

Our mission is to build trust between providers, users, and regulators by assuring the compliance of AI systems with safety requirements. Because AI operates at levels of speed and complexity beyond human comprehension, we empower engineers to provide mathematically verifiable guarantees that these systems satisfy formal specifications of their intended behaviour.

Our Approach

We harness two complementary (but unfortunately often siloed) AI paradigms: machine learning—rooted in data and experience, extremely powerful but today not auditable—and automated reasoning—rooted in rules and logic, mathematically trustworthy but traditionally not as scalable.

Our approach makes learning and reasoning work at the service of each other, combining the best of both worlds. We build upon three technical pillars, which correspond to the phases of a new workflow for AI safety with formal guarantees:

- Formal Modelling (Pre-Learning): We empower providers, users, and regulators to establish precise, machine-checkable assumptions about deployment environments and formal specifications of AI system behaviour. These assumptions can be tailored to varying levels of detail and accuracy.

- Formal Certification (In-Learning): We facilitate the development of machine learning technologies that not only make decisions but also generate formal certificates attesting to their compliance with predefined safety and behavioural standards.

- Formal Monitoring (Post-Learning): We implement continuous monitoring systems to verify ongoing compliance with safety certificates and validate environmental assumptions post-deployment. Any deviations from expected behaviour are promptly flagged for attention.

When the consequene is harm, do you trust a test – or demand a guarantee?

For taking zero chances. (come on, this is too cheeky)

Our Team

Luca Arnaboldi

Luca is a computer science researcher specialising in cybersecurity, with a focus on safeguarding AI systems through rigorous verification. He leads efforts to make machine learning models demonstrably safe, grounded in real-world industrial applications.

Pascal Berrang

Pascal is an expert in the security and privacy of AI and blockchain systems and pioneered the concept of membership inference attack in ML models and co-invented ML-Leaks.

Marco Casadio

Marco is finishing his PhD at Heriot Watt University, his research interests involve verification and machine learning. More precisely, they involve enforcing logical constraints to neural networks through loss functions. His most recent work focused on ensuring robustness of NLP systems.

Marek Chalupa

Marek develops algorithms and tools for verification and monitoring of systems and led the development of the award winning software verification tool Symbiotic.

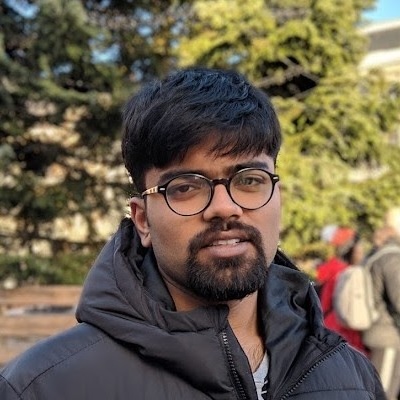

Abishek De

Anishek is an expert in model checking and proof theory. He has extensive hands-on experience with interactive theorem provers.

Mirco Giacobbe

Mirco specialises on the integration of machine learning and automated reasoning technologies for the formal verification of hardware and software and the safeguard of cyber-physical systems.

Edoardo Manino

Edoardo is an expert in neural network verification at the software and hardware level. He is an advocate for checking the implementation of AI systems He holds ARIA opportunity seed funding on the safety of closed-loop AI systems in finite precision.

Simon Schmidt

Simon develops technical AI-based solutions for customers. He obtained a PhD in mechanical engineering in computational mechanics, with focus on numerical simulation algorithms.

Ayberk Tosun

Ayberk is an expert in automated theorem proving with a focus on constructive mathematics in the foundational setting of Homotopy Type Theory. He obtained his PhD at Birmingham working in predicative pointfree topology.

Provide name, short statement & photo (headshot) for team, advisory board, partners.